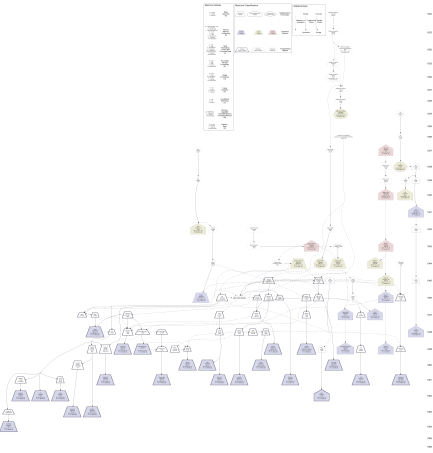

The Evolution of the Modern Computer

An Open Source Graphical History

(1934 to 1950)

This is the home of the Computer Evolution File. This file attempts to provide a comprehensive graphical representation of the evolution of the modern computer for the period 1934 to 1950. The file is licensed under a Creative Commons Attribution-ShareAlike license. Please feel free to download it and make improvements and derivative works. Please send me a copy of your changes and I will share the updates on this page.

foobar@bigfoot.com

Latest Version: 0.3, released 2003-12-23

| File | Size | Description |
|---|---|---|
| ComputerEvolution_V0.3.txt | 70k | Dot file for Graphviz |
| ComputerEvolution_V0.3.png | 468k | Full size Portable Network Graphics (PNG) file |

Wikipedia Article for the term Computer

( Technology ) I just rewrote the first four sections of the Wikipedia article for the term Computer. The current page is here.

This link to the change history page for the article currently shows one change on line 6. This was a trivial typo that I just fixed in my final version. Over time this link should change to show all the modifications to the article made by other people. I'm curious to see how significant the changes will be...

Charles Babbage and Howard Aiken: How the Analytical Engine influenced the IBM Automatic Sequence Controlled Calculator, aka the Harvard Mk I

( Technology ) In 1936, [Howard] Aiken had proposed his idea [to build a giant calculating machine] to the [Harvard University] Physics Department, ... He was told by the chairman, Frederick Saunders, that a lab technician, Carmelo Lanza, had told him about a similar contraption already stored up in the Science Center attic.

Intrigued, Aiken had Lanza lead him to the machine, which turned out to be a set of brass wheels from English mathematician and philosopher Charles Babbage's unfinished "analytical engine" from nearly 100 years earlier.

Aiken immediately recognized that he and Babbage had the same mechanism in mind. Fortunately for Aiken, where lack of money and poor materials had left Babbage's dream incomplete, he would have much more success.

Later, those brass wheels, along with a set of books that had been given to him by the grandson of Babbage, would occupy a prominent spot in Aiken's office. In an interview with I. Bernard Cohen '37, PhD '47, Victor S. Thomas Professor of the History of Science Emeritus, Aiken pointed to Babbage's books and said, "There's my education in computers, right there; this is the whole thing, everything I took out of a book."

[The Harvard University Gazette. Howard Aiken: Makin' a Computer Wonder, by Cassie Ferguson]

Vannevar Bush and The Limits of Prescience

( Technology ) Today Vannevar Bush (rhymes with achiever) is often remembered for his July 1945 Atlantic Monthly article As We May Think, in which he describes a hypothetical machine called a Memex. This machine contained a large indexed store of information and allowed a user to navigate through the store using a system similar to hypertext links. At the time of writing his essay, Bush knew more about the state of technology development in the US than almost any other person. During the war, he was Roosevelt's chief adviser on military research. He was responsible for many wartime research projects, including radar, the atomic bomb, and the development of early computers. If anyone should ever have been capable of predicting the future, it was Vannevar Bush in 1945. He is an almost unprecedented test case for the art of prediction. Unlike almost anyone else before or since, Bush was actually in possession of ALL the facts, as only the head of technology research in a country at war could be.

Source Code as History

( Technology ) When the history of early software development is written, it will be a travesty. Few historians will have the ability, and even fewer the inclination, to learn long-dead programming languages. History will be derived from the documentation, not the source code. Alan Turing's perplexed, handwritten annotation "How did this happen?" on a cutting of Autocode taped into his notebook will remain a mystery.

What kind of bug would stump Alan Turing? Was it merely a typo that took a few hours to find? A simple mistake, maybe? Or did the discipline of the machine expose a fundamental misconception and thereby teach him a lesson? The only way to know would be to learn Autocode.
